Kevin Carey - Decoding the Value of Computer Science


Here’s a Chronicle of Higher Education story that illustrates two of my pet obsessions:

> There, at the Indiana state-budget office, my role was to calculate how proposals for setting local school property-tax rates and distributing funds would play out in the state’s 293 school districts. I did this by teaching myself the statistical program SAS. The syntax itself was easy, since the underlying logic wasn’t far from Pascal. But the only way to simulate the state’s byzantine school-financing law was to understand every inch of it, every historical curiosity and long-embedded political compromise, to the last dollar and cent. To write code about a thing, you have to know the thing itself, absolutely.

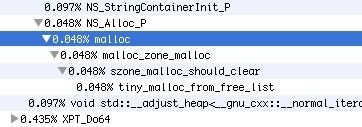

> Over time I became mildly obsessed with the care and feeding of my SAS code. I wrote and rewrote it with the aim of creating the simplest and most elegant procedure possible, one that would do its job with a minimum of space and time. It wasn’t that someone was bothering me about computer resources. It just seemed like the right thing to do.

> Eventually I reached the limit of how clean my code could be. Yet I was unsatisfied. Parts still seemed vestigial and wrong. I realized the problem wasn’t my modest programming skills but the law itself, which had grown incrementally over the decades, each budget bringing new tweaks and procedures that were layered atop the last.

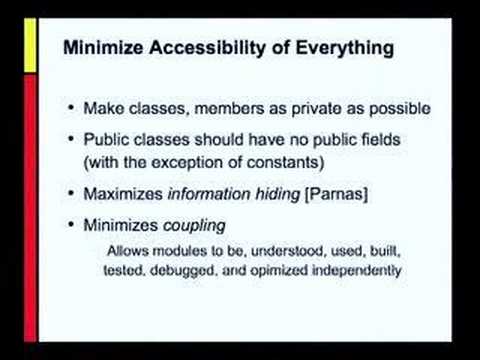

A good computer scientist, like anyone else who is good at something, is motivated not just by a desire to get things done but by a desire to get them done the right way. Of course, the existence of that desire doesn’t guarantee it can be satisfied. That is why moral suasion, appealing to people’s wish to do things right, is the best way to lead the good ones. The bad ones will eventually just copy the good, because they are too foolish to figure out how to do something creative on their own.

> So I sat down, mostly as an intellectual exercise, to rewrite the formula from first principles. The result yielded a satisfyingly direct SAS procedure. Almost as an afterthought, I showed it to a friend who worked for the state legislature. To my surprise, she reacted with enthusiasm, and a few months later the new financing formula became law. Good public policy and good code, it turned out, go hand in hand. The law has reaccumulated some extraneous procedures in the decade since. But my basic ideas are still there, directing billions of dollars to schoolchildren using language recorded in, as state laws are officially named, the Indiana Code.

The other point this illustrates is that systems evolve in the direction of the tools used to maintain them. The new formula in the Indiana Code was written to bring the law into line with the ideal program, even though a less-than-ideal program already matched the existing law. Because this effect is so powerful, we must be careful about how we arrange our systems and the tools we maintain them with.

Via llimllib